| December 11, 2020 |

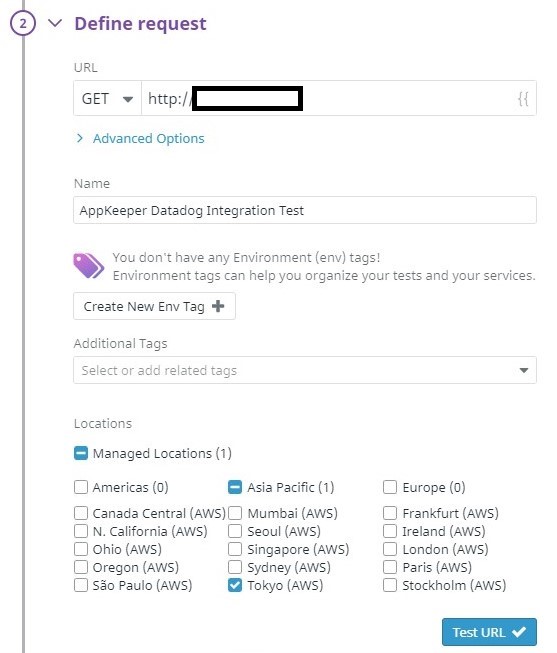

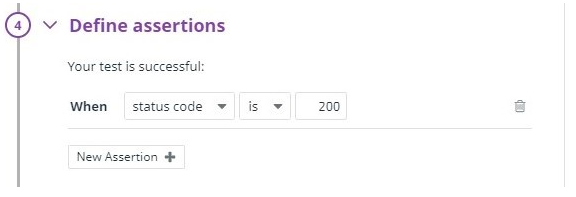

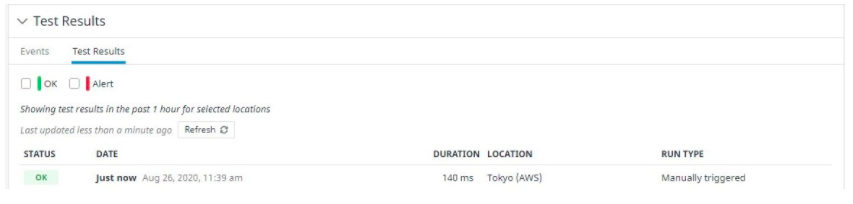

Using Datadog for Amazon EC2 Monitoring? Pair with SIOS AppKeeper for Automated Remediation |

| December 8, 2020 |

5 Signs That It Will Take More Than A Blog Post To Fix Your High Availability

The signs are there. The warning lights are flashing. In your gut, you can sense it. Maybe you can’t sleep. Your problems with high availability are deep. But maybe you are not quite sure.

1. If you think your cloud SLA is all you need for high availability

Cloud solutions have provided great advancements in hardware availability and resilience. However, application high availability requires more than just selecting the right hypervisor or cloud provider. Your strategy for high availability cannot stop with the SLA provided by a cloud or virtualization provider. As quoted by Wired, “The almost four-day Amazon outage of April 2011 did not breach Amazon’s EC2 SLA, which as a FAQ explains, ‘guarantees 99.95% availability of the service within a Region over a trailing 365 period.’” In a DZone article, our own David Bermingham breaks down the differences between cloud SLAs and application availability in detail. If you want a highly available infrastructure, it must include monitoring, recovery, and resilience at the data and application layers as well.

2. If you are just using the high availability clustering that came with your open source operating system

If so, consider this: you probably didn’t select your database based on what was bundled with the OS, so why would you select your HA solution on that criterion alone? Bundled tools go a long way in providing extra assurance, possibilities, and capabilities. However, despite the ease of access, bundled tools and OS clustering software are not always capable of meeting your SLA, RPO, RTO, and availability requirements. If your enterprise runs a combination of operating systems, your team will likely need help navigating the different tools and understanding how they integrate. It’s kind of like choosing the hedge clippers and push reel mower left on the curb to shape “Azalea,” the par-5 13th hole at Augusta. Both tools can cut grass, but how much time do you have? How are you going to handle the complexity? Which would you trust? Your strategy for high availability requires more than the convenience of what is bundled with the OS; otherwise, you’d be running MySQL instead of SAP HANA.

3. If you think that enterprise application licensing, such as SQL Enterprise or Oracle Enterprise, is the same thing as enterprise high availability

In addition to costing more, many enterprise application licenses improve the application’s ability to recover in some high availability scenarios. However, it is highly unlikely that your entire enterprise is based on a single application. Your high availability is going to require more than just a highly available database solution. You’ll need an enterprise-grade application monitoring and recovery solution with a breadth of support for all of your applications and databases. In addition, you’ll need the ability to manage and replicate not just database data, but critical application and configuration data as well. Availability for a single database or a simple application is one thing, but HA for a complex, multipart application and its supporting database is very different. There are more services, more parts that need to be coordinated, a more complex architecture to orchestrate, and more specific best practices to adhere to before, during, and after failover or switchover. That is more than what your enterprise license paid for.

4. If your downtime is growing and your uptime is shrinking

The pace of life is ever increasing in many fields. When was the last time your team recovered from backup, manually restarted the applications that were deemed critical, or restarted a set of failed virtual machines or nodes? Outages cannot keep arriving faster than your team can sustain, or faster than it can move beyond firefighting to fire prevention and fireproofing. “You can only run so hard so long” (Carey Nieuwhof). For some of you, the firefighting has gone on for too long, and your outages are becoming more common than your uptime.

5. If your first failover test was on the production server

A recent client remarked that it is simply impossible to test for every possible disaster scenario. As new software is created, deployed, updated, and patched, the challenges in higher availability are increasing. But your live production data is not the place to find out what does not play well together. And while Go-Live and Post-Go-Live will always have their share of surprises, the inability to actually fail over and run on the backup node should not be one of them.

Scouring blogs can provide you with helpful tips and insights to define, redefine, and improve your higher availability. But if the warning signs are telling you that you’ve traded true availability for some semblance of “just enough,” then it will take more than a blog post, or scouring every blog post in the availability world for that matter, to fix your HA.

– Cassius Rhue, Vice President, Customer Experience

Reproduced with permission from SIOS |

| November 27, 2020 |

9 Signs You Have an Application Availability Problem |

| November 12, 2020 |

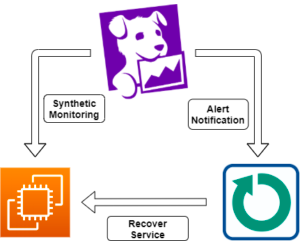

APM Automation – The Missing Ingredient For Application Performance Monitoring Solutions

|

| October 25, 2020 |

Six Reasons Not to Buy SIOS High Availability Software . . . If You Dare |