| January 25, 2022 |

How to Protect Application and Databases – Oracle Clustering |

| January 21, 2022 |

How to Protect Application and Databases – SAP Clustering |

| January 18, 2022 |

How to Protect Application and Databases – SQL Server Clustering |

| January 13, 2022 |

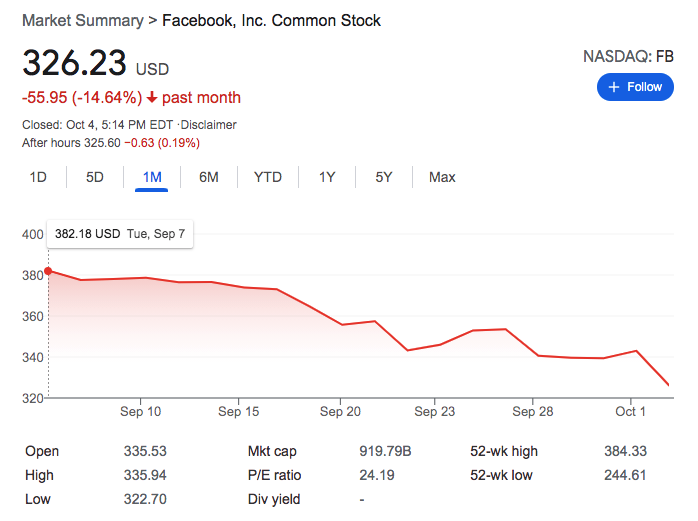

Why You Need Business Continuity Plans |

| January 9, 2022 |

Fixing Your Cloud Journey |