| February 20, 2023 |

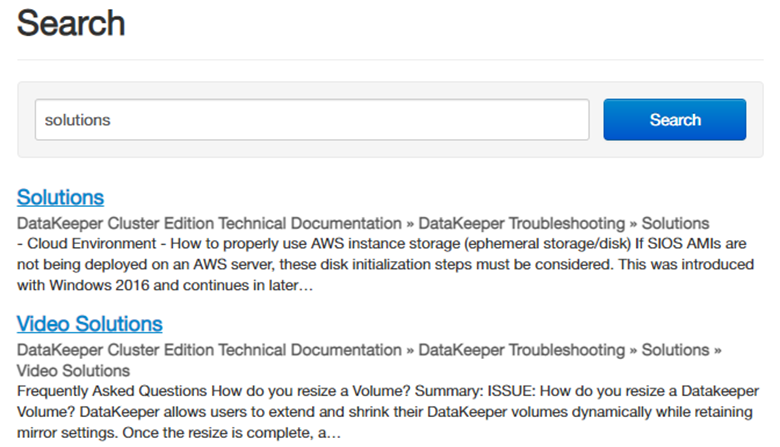

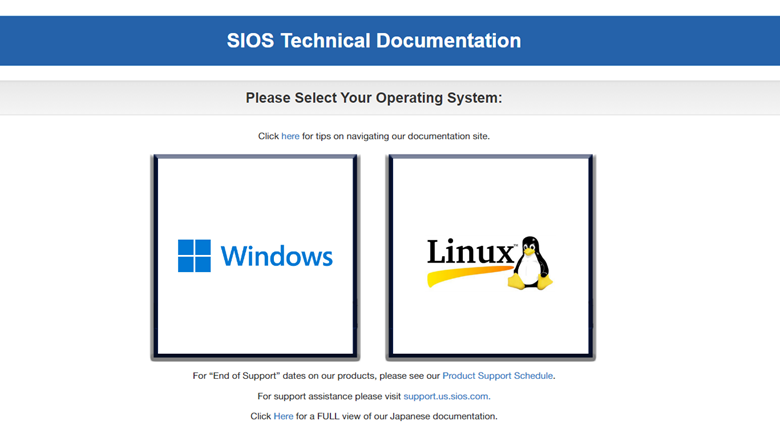

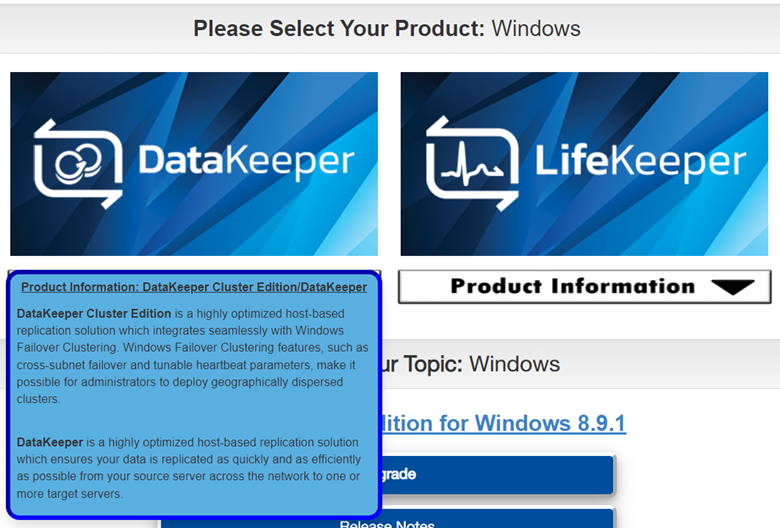

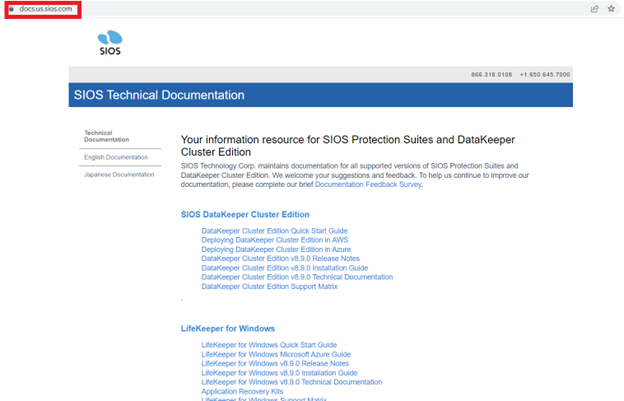

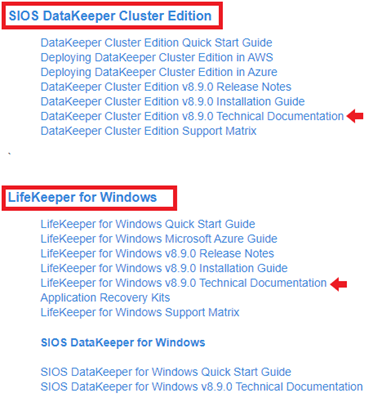

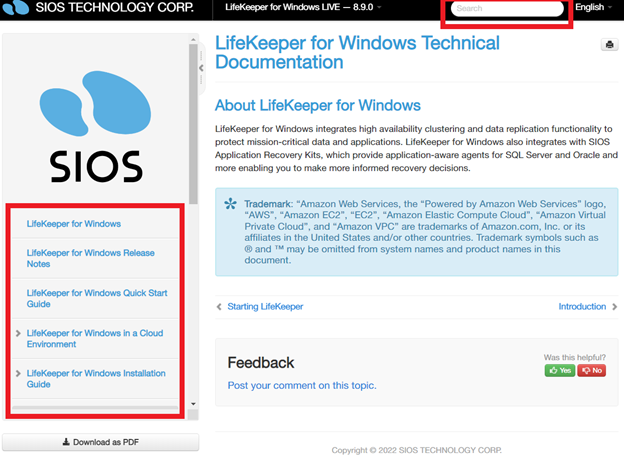

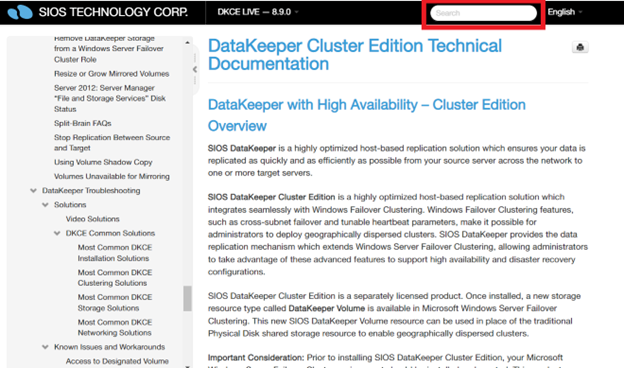

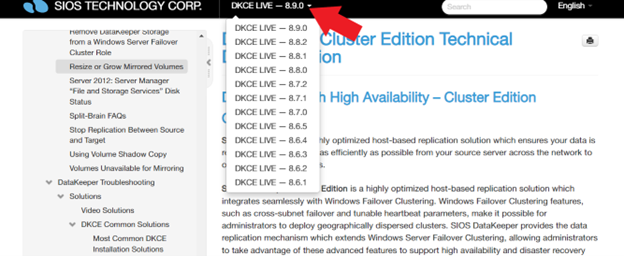

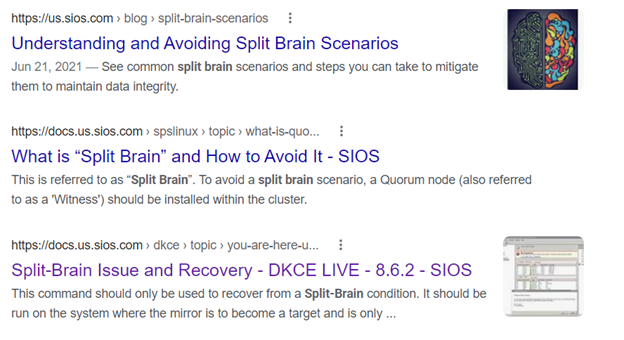

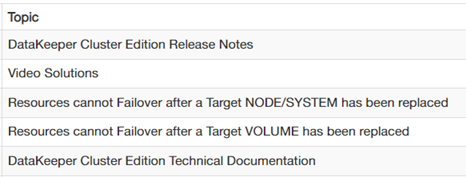

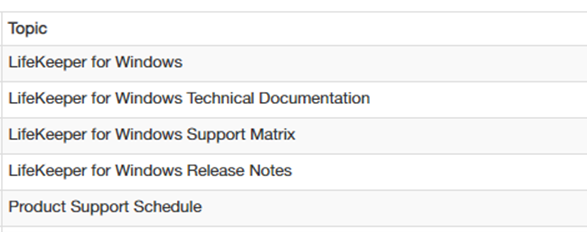

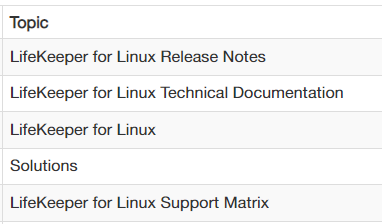

New SIOS Documentation Site |

| February 16, 2023 |

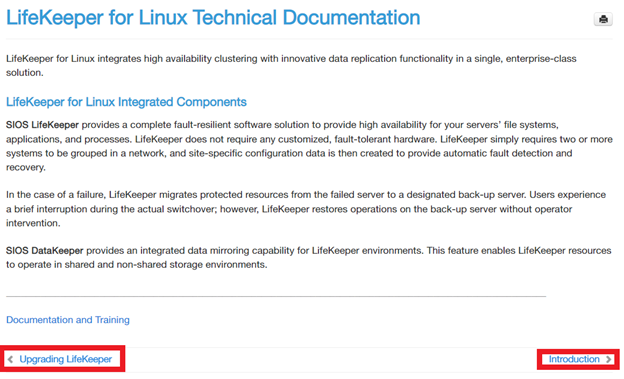

How to Get Started Successfully with SIOS Documentation |

| February 12, 2023 |

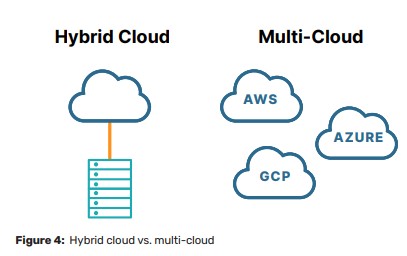

Multi-Cloud High Availability for Business-Critical Applications |

| February 8, 2023 |

Video: SIOS DataKeeper. Reproduced with permission from SIOS |

| February 4, 2023 |

Video: SIOS LifeKeeper. Reproduced with permission from SIOS |