Date: November 30, 2018

Tags: create a 2 node mysql cluster without shared storage, data replication, High Availability, MySQL

Step-by-Step: How To Create A 2-Node MySQL Cluster Without Shared Storage, Part 2

The previous post introduced the advantages of running a MySQL cluster with a shared-nothing storage configuration. We also began walking through the process of setting up the cluster, using data replication and SteelEye Protection Suite (SPS) for Linux. In this post, we complete the process of creating a 2-node MySQL cluster without shared storage. Let’s get started.

Creating Comm Paths

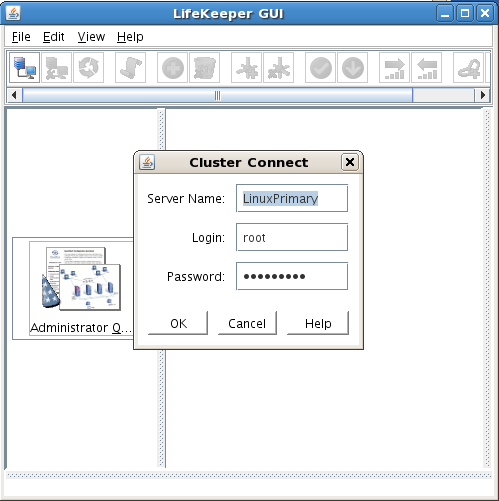

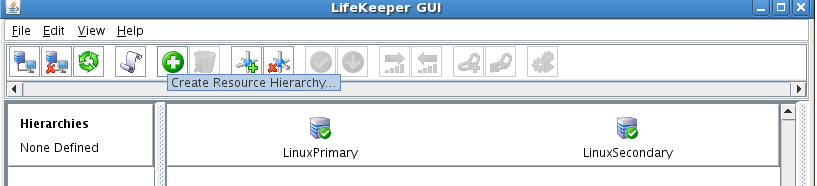

Now it’s time to access the SteelEye LifeKeeper GUI. LifeKeeper is an integrated component of SPS for Linux. The LifeKeeper GUI is a Java-based application that can be run as a native Linux app or as an applet within a Java-enabled Web browser. (The GUI is based on Java RMI with callbacks, so hostnames must be resolvable or you might receive a Java 115 or 116 error.)
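
Because the GUI depends on resolvable hostnames, it can help to confirm name resolution before launching it. The following is a minimal sketch, not part of SPS: the function name `unresolved_hosts` and the node name `LinuxSecondary` are assumptions made for illustration, and the check covers only forward lookups as seen from the machine where you run it.

```python
import socket


def unresolved_hosts(hostnames, resolver=socket.getaddrinfo):
    """Return the hostnames that the resolver cannot look up."""
    failed = []
    for name in hostnames:
        try:
            # The port is irrelevant; we only care whether the name resolves.
            resolver(name, None)
        except socket.gaierror:
            failed.append(name)
    return failed


# Example usage (hypothetical node names, replace with your own):
#   missing = unresolved_hosts(["LinuxPrimary", "LinuxSecondary"])
#   if missing:
#       print("Cannot resolve:", ", ".join(missing))
```

Run the check on each cluster node and on any workstation that will host the GUI, since each machine resolves names independently.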

To start the GUI application, enter this command on either of the cluster nodes:

`/opt/LifeKeeper/bin/lkGUIapp &`

Or, to open the GUI applet from a Web browser, go to `http://<hostname>:81`.

The first step is to make sure that you have at least two TCP communication (Comm) paths between each primary server and each target server, for heartbeat redundancy. This way, the failure of one communication line won’t cause a split-brain situation. Verify the paths on the primary server. The following screenshots walk you through the process of logging into the GUI, connecting to both cluster nodes, and creating the Comm paths.
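
The redundancy rule can be stated as a simple check: every pair of servers needs at least two Comm paths in the ALIVE state. Here is a rough sketch of that rule. The tuple layout and the function name `redundancy_warnings` are assumptions for illustration; LifeKeeper does not expose Comm paths in this form, so you would fill in the list by hand from what the GUI shows.

```python
from collections import Counter


def redundancy_warnings(comm_paths, minimum=2):
    """Flag server pairs with fewer than `minimum` ALIVE Comm paths.

    comm_paths is an iterable of (local_server, remote_server, state)
    tuples, a hypothetical layout chosen for this sketch.
    """
    alive = Counter()
    pairs = set()
    for local, remote, state in comm_paths:
        pair = (local, remote)
        pairs.add(pair)
        if state == "ALIVE":
            alive[pair] += 1
    return [
        f"{local} -> {remote}: only {alive[(local, remote)]} ALIVE Comm path(s)"
        for local, remote in sorted(pairs)
        if alive[(local, remote)] < minimum
    ]
```

An empty result means that the loss of a single communication line still leaves a working heartbeat between the two servers.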

Step 1: Connect to primary server

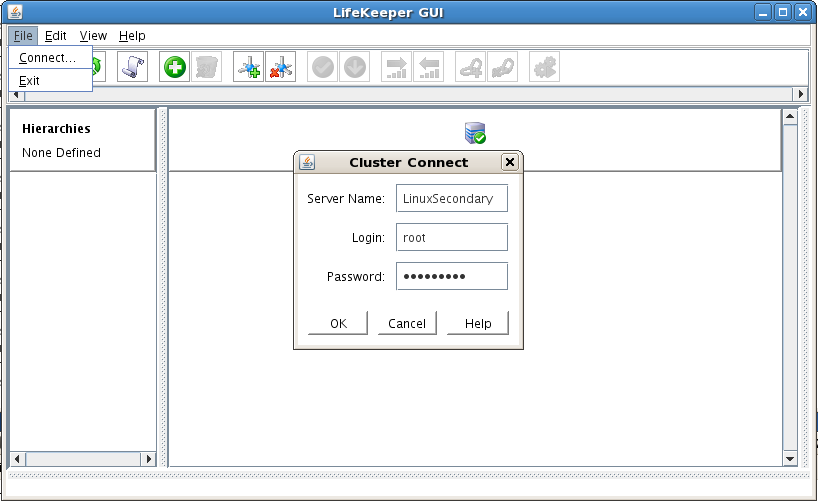

Step 2: Connect to secondary server

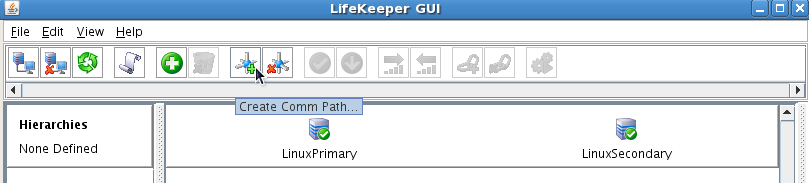

Step 3: Create the Comm path

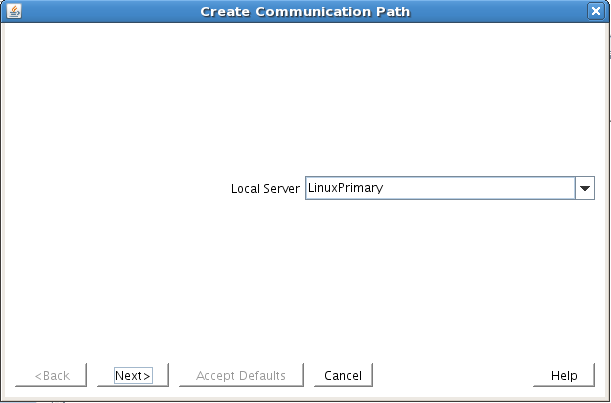

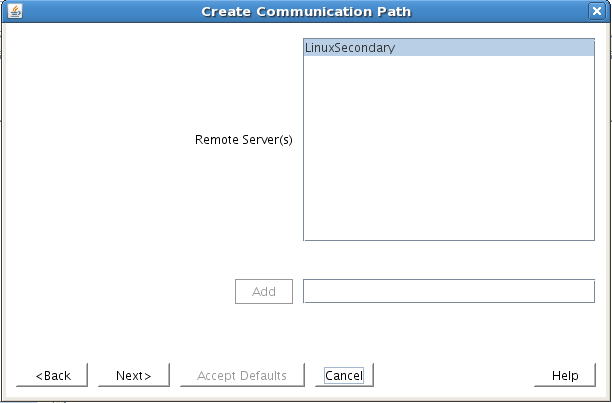

Step 4: Choose the local and remote servers

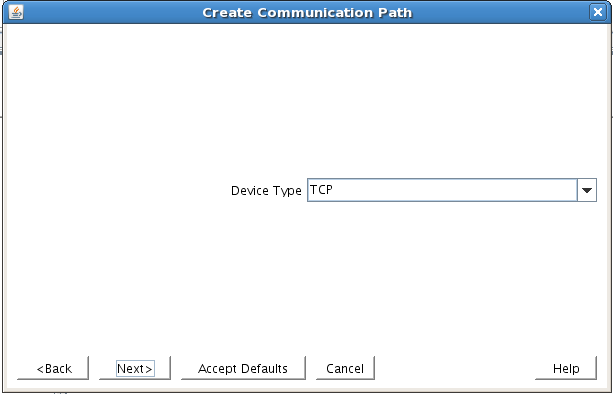

Step 5: Choose device type

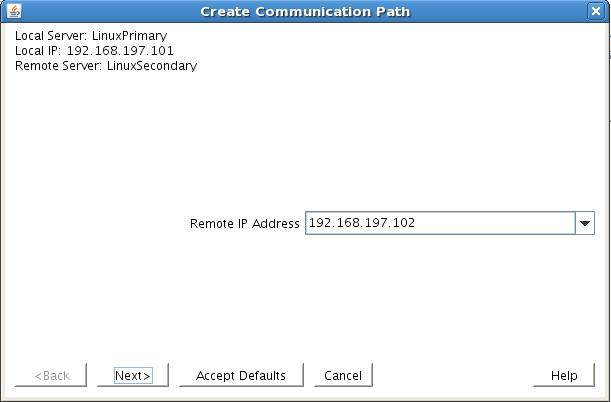

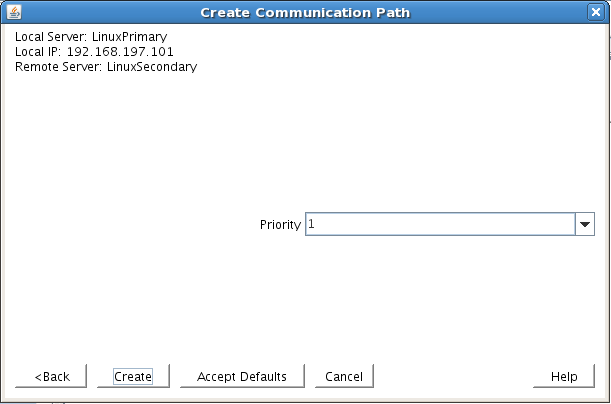

Next, you are presented with a series of dialogue boxes. For each box, provide the required information and click Next to advance. (For each field in a dialogue box, you can click Help for additional information.)

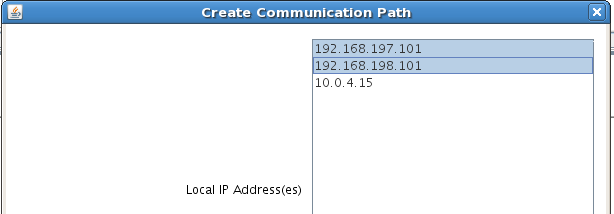

Step 6: Choose IP address for local server to use for Comm path

Step 7: Choose IP address for remote server to use for Comm path

Step 8: Enter Comm path priority on local server

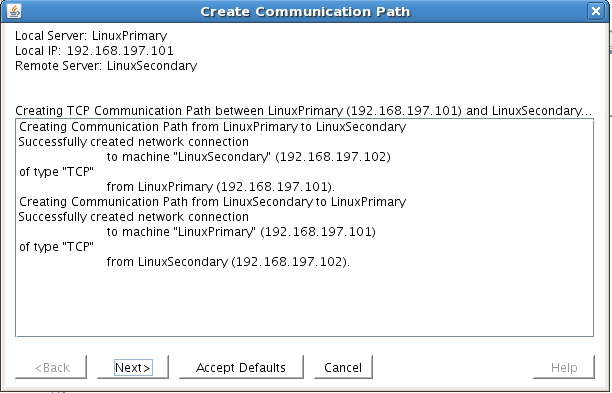

After entering data in all the required fields, click Create. You’ll see a message that indicates that the network Comm path was successfully created.

Step 9: Finalize Comm path creation

Click Next. If you chose multiple local IP addresses or remote servers and set the device type to TCP, then the procedure returns you to the setup wizard to create the next Comm path. When you’re finished, click Done in the final dialogue box. Repeat this process until you have defined all the Comm paths you plan to use.

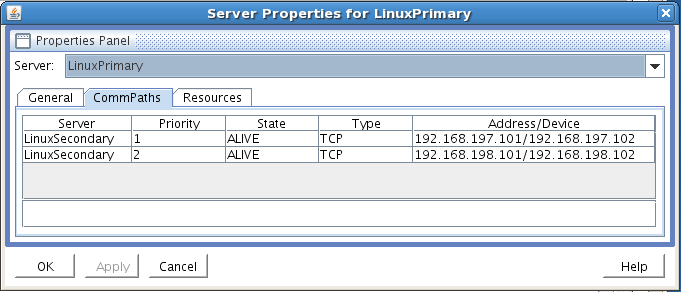

Verify that the communications paths are configured properly by viewing the Server Properties dialogue box. From the GUI, select Edit > Server > Properties, and then choose the CommPaths tab. The displayed state should be ALIVE. You can also check the server icon in the right-hand primary pane of the GUI. If only one Comm path has been created, the server icon is overlaid with a yellow warning icon. A green heartbeat checkmark indicates that at least two Comm paths are configured and ALIVE.

Step 10: Review Comm path state

Creating And Extending An IP Resource

In the LifeKeeper GUI, create an IP resource and extend it to the secondary server by completing the following steps. This virtual IP can move between cluster nodes along with the application that depends on it. By using a virtual IP as part of your cluster configuration, you provide seamless redirection of clients upon switchover or failover of resources between cluster nodes because they continue to access the database via the same FQDN/IP.

Step 11: Create resource hierarchy

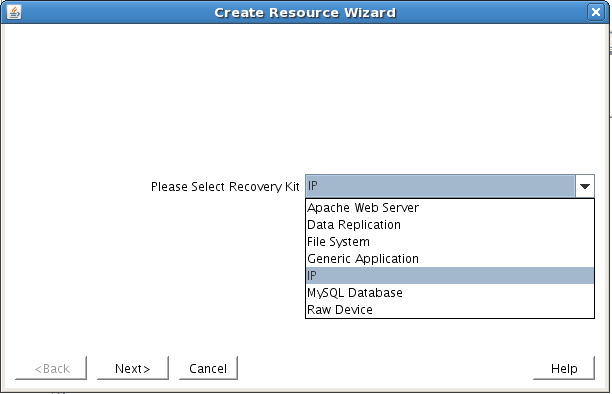

Step 12: Choose IP ARK

Enter the appropriate information for your configuration, using the following recommended values. (Click the Help button for further information.) Click Next to continue after entering the required information.

| Field | Tips |
| --- | --- |
| Resource Type | Choose IP Address as the resource type and click Next. |
| Switchback Type | Choose Intelligent and click Next. |
| Server | Choose the server on which the IP resource will be created. Choose your primary server and click Next. |
| IP Resource | Enter the virtual IP information and click Next. (This is an IP address that is not in use anywhere on your network. All clients will use this address to connect to the protected resources.) |
| Netmask | Enter the IP subnet mask that your TCP/IP resource will use on the target server. Any standard netmask for the class of the specific TCP/IP resource address is valid. The subnet mask, combined with the IP address, determines the subnet that the TCP/IP resource will use and should be consistent with the network configuration. This sample configuration uses 255.255.255.0 as the subnet mask on both networks. |
| Network Connection | Enter the physical Ethernet card with which the IP address interfaces. Choose the network connection that will allow your virtual IP address to be routable. Choose the correct NIC and click Next. |
| IP Resource Tag | Accept the default value and click Next. This value affects only how the IP is displayed in the GUI. The IP resource will be created on the primary server. |
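
To see how the netmask and IP address together determine the subnet, here is a small sketch using Python's standard `ipaddress` module. It checks whether a virtual IP falls in the same subnet as the NIC that will carry it. The NIC address 192.168.197.101 is an assumption for illustration; the virtual IP and netmask are the ones used in this sample configuration.

```python
import ipaddress


def on_same_subnet(virtual_ip, nic_ip, netmask):
    """Return True if virtual_ip lies in the subnet defined by nic_ip/netmask."""
    # strict=False lets us pass a host address rather than the network address.
    network = ipaddress.ip_network(f"{nic_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(virtual_ip) in network


# The public NIC address below is hypothetical.
print(on_same_subnet("192.168.197.151", "192.168.197.101", "255.255.255.0"))
```

If the function returns False for the NIC you selected, the virtual IP is unlikely to be routable through that interface.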

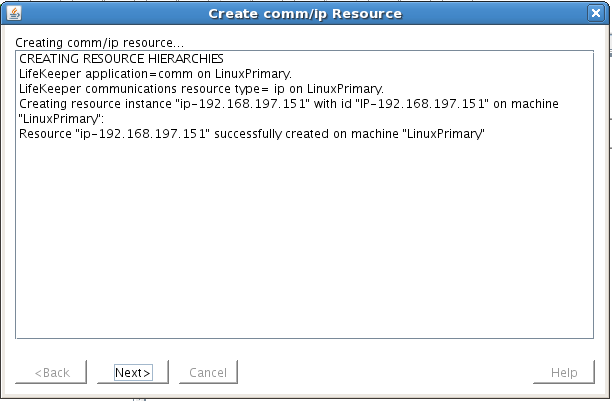

LifeKeeper creates and validates your resource. After receiving the message that the resource has been created successfully, click Next.

Step 13: Review notice of successful resource creation

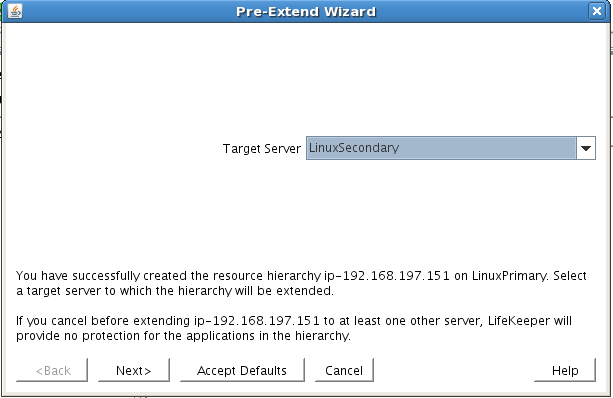

Now you can complete the process of extending the IP resource to the secondary server.

Step 14: Extend IP resource to secondary server

The process of extending the IP resource starts automatically after you finish creating an IP address resource and click Next. You can also start this process from an existing IP address resource, by right-clicking the active resource and selecting Extend Resource Hierarchy. Use the information in the following table to complete the procedure.

| Field | Recommended Entries or Notes |
| --- | --- |
| Switchback Type | Leave as Intelligent and click Next. |
| Template Priority | Leave as default (1). |
| Target Priority | Leave as default (10). |
| Network Interface | This is the physical Ethernet card with which the IP address interfaces. Choose the network connection that will allow your virtual IP address to be routable. The correct physical NIC should be selected by default. Verify and then click Next. |
| IP Resource Tag | Leave as default. |
| Target Restore Mode | Choose Enable and click Next. |
| Target Local Recovery | Choose Yes to enable local recovery for the SQL resource on the target server. |
| Backup Priority | Accept the default value. |

After receiving the message that the hierarchy extension operation is complete, click Finish and then click Done.

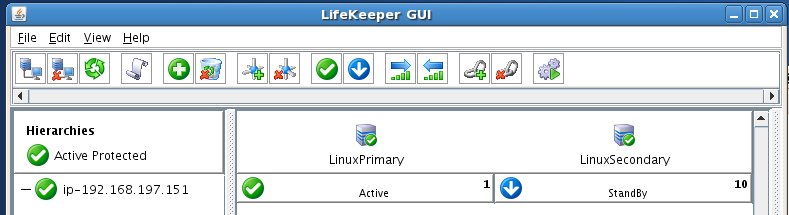

Your IP resource (example: 192.168.197.151) is now fully protected and can float between cluster nodes, as needed. In the LifeKeeper GUI, you can see that the IP resource is listed as Active on the primary cluster node and Standby on the secondary cluster node.

Step 15: Review IP resource state on primary and secondary nodes

Creating A Mirror And Beginning Data Replication

You’re halfway to a 2-node MySQL cluster without shared storage! You’re ready to set up and configure the data replication resource, which you’ll use to synchronize MySQL data between cluster nodes. For this example, the data to replicate is in the /var/lib/mysql partition on the primary cluster node. The source volume must be mounted on the primary server, the target volume must not be mounted on the secondary server, and the target volume size must be equal to or larger than the source volume size.
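
The three preconditions for the mirror can be written down as a short checklist function. This is a sketch under stated assumptions: the device names `/dev/sdb1` and `/dev/sdc1` are hypothetical, the mount tables are passed in as text in the format of `/proc/mounts` (collected from each node by whatever means you prefer), and the sizes are supplied in bytes by the caller.

```python
def mounted_devices(mounts_text):
    """Parse /proc/mounts-style text into the set of mounted device names."""
    return {line.split()[0] for line in mounts_text.splitlines() if line.strip()}


def mirror_problems(source_dev, target_dev, primary_mounts, secondary_mounts,
                    source_bytes, target_bytes):
    """Return a list of violated mirror preconditions (empty if all hold)."""
    problems = []
    if source_dev not in mounted_devices(primary_mounts):
        problems.append("source volume is not mounted on the primary server")
    if target_dev in mounted_devices(secondary_mounts):
        problems.append("target volume is mounted on the secondary server")
    if target_bytes < source_bytes:
        problems.append("target volume is smaller than the source volume")
    return problems


# Hypothetical sample data for illustration only.
primary = "/dev/sda1 / ext4 rw 0 0\n/dev/sdb1 /var/lib/mysql ext4 rw 0 0\n"
secondary = "/dev/sda1 / ext4 rw 0 0\n"
print(mirror_problems("/dev/sdb1", "/dev/sdc1", primary, secondary,
                      10 * 1024**3, 10 * 1024**3))
```

An empty list means all three conditions from the paragraph above are met for the values you supplied.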

The following screenshots illustrate the next series of steps.

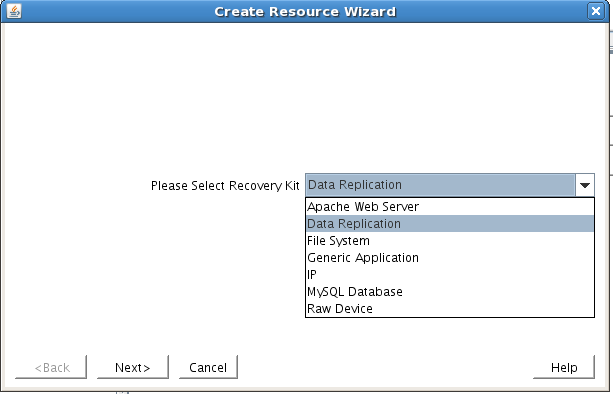

Step 16: Create resource hierarchy

Step 17: Choose Data Replication ARK

Use these values in the Data Replication wizard.

| Field | Recommended Entries or Notes |
| --- | --- |
| Switchback Type | Choose Intelligent. |
| Server | Choose LinuxPrimary (the primary cluster node or mirror source). |
| Hierarchy Type | Choose Replicate Existing Filesystem. |
| Existing Mount Point | Choose the mounted partition to replicate; in this example, /var/lib/mysql. |
| Data Replication Resource Tag | Leave as default. |
| File System Resource Tag | Leave as default. |
| Bitmap File | Leave as default. |
| Enable Asynchronous Replication | Leave as default (Yes). |

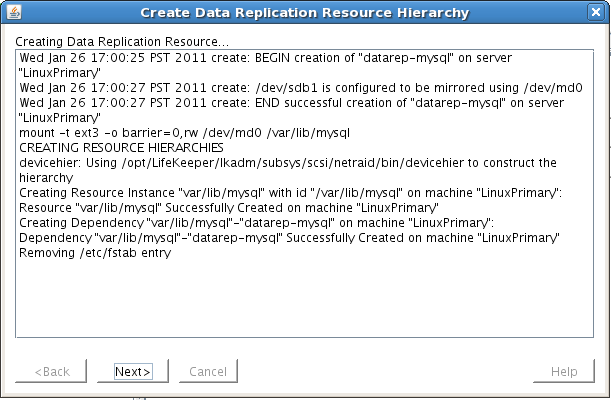

Click Next to begin the creation of the data replication resource hierarchy. The GUI will display the following message.

Step 18: Begin creation of Data Replication resource

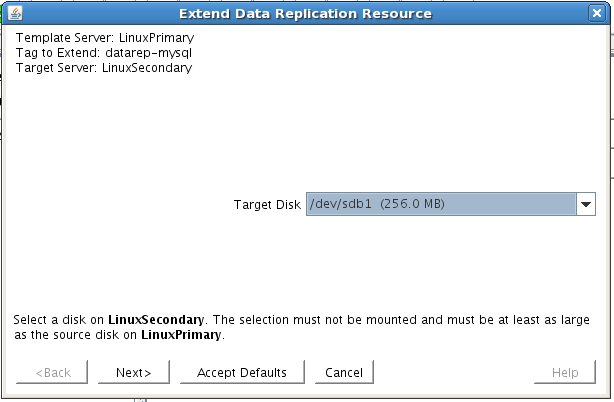

Click Next to begin the process of extending the data replication resource. Accept all default settings. When asked for a target disk, choose the free partition on your target server that you created earlier in this process. Make sure to choose a partition that is as large as or larger than the source volume and that is not mounted on the target system.

Step 19: Begin extension of Data Replication resource

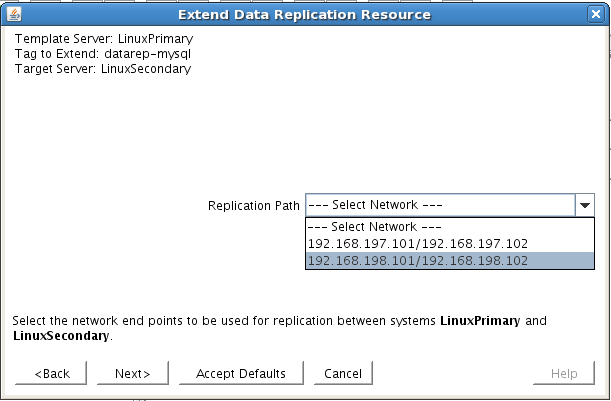

Eventually, you are prompted to choose the network over which you want the replication to take place. In general, separating your user and application traffic from your replication traffic is a best practice. This sample configuration has two separate network interfaces: a “public NIC” on the 192.168.197.X subnet and a “private/backend NIC” on the 192.168.198.X subnet. We will configure replication to go over the back-end network, 192.168.198.X, so that user and application traffic does not compete with replication.
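
When a node has several addresses, a quick way to confirm which endpoints belong on the replication path is to filter them by the back-end network. This sketch assumes the 255.255.255.0 mask from this sample configuration (written as /24); the host addresses ending in .101 and .102 are hypothetical.

```python
import ipaddress

BACKEND = ipaddress.ip_network("192.168.198.0/24")


def replication_pair_ok(local_ip, remote_ip, backend=BACKEND):
    """Return True only if both replication endpoints sit on the back-end network."""
    return all(ipaddress.ip_address(ip) in backend for ip in (local_ip, remote_ip))


# Hypothetical endpoint addresses for the two cluster nodes.
print(replication_pair_ok("192.168.198.101", "192.168.198.102"))
print(replication_pair_ok("192.168.197.101", "192.168.198.102"))
```

A False result points to an endpoint on the public network, which would put replication in competition with client traffic.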

Step 20: Choose network for replication traffic

Click Next to continue through the wizard. Upon completion, your resource hierarchy will look like this:

Step 21: Review Data Replication resource hierarchy

Creating The MySQL Resource Hierarchy

You need to create a MySQL resource to protect the MySQL database and make it highly available between cluster nodes. At this point, MySQL must be running on the primary server but not running on the secondary server.
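
One rough way to confirm the required starting state is to probe MySQL's default TCP port, 3306, on both nodes. This is a sketch, not an SPS feature. It assumes MySQL accepts TCP connections on the default port; a server configured with a different port or with networking disabled would need a different check. The function names and the `LinuxSecondary` hostname are assumptions for illustration.

```python
import socket


def is_listening(host, port=3306, timeout=2.0, connect=socket.create_connection):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with connect((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def mysql_state_problems(primary, secondary, probe=is_listening):
    """Check that MySQL answers on the primary but not on the secondary."""
    problems = []
    if not probe(primary):
        problems.append(f"MySQL is not reachable on {primary}")
    if probe(secondary):
        problems.append(f"MySQL appears to be running on {secondary}")
    return problems


# Example usage (hypothetical hostnames):
#   print(mysql_state_problems("LinuxPrimary", "LinuxSecondary"))
```

An empty list suggests the nodes are in the state the wizard expects, within the limits of a port probe.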

From the GUI toolbar, click Create Resource Hierarchy. Select MySQL Database and click Next. Proceed through the Resource Creation wizard, providing the following values.

| Field | Recommended Entries or Notes |
| --- | --- |
| Switchback Type | Choose Intelligent. |
| Server | Choose LinuxPrimary (primary cluster node). |
| Location of my.cnf | Enter /var/lib/mysql. (Earlier in the MySQL configuration process, you created a my.cnf file in this directory.) |
| Location of MySQL executables | Leave as default (/usr/bin) because you’re using a standard MySQL install/configuration in this example. |
| Database tag | Leave as default. |

Click Create to define the MySQL resource hierarchy on the primary server. Click Next to extend the file system resource to the secondary server. In the Extend wizard, choose Accept Defaults. Click Finish to exit the Extend wizard. Your resource hierarchy should look like this:

Step 22: Review MySQL resource hierarchy

Creating The MySQL IP Address Dependency

Next, you’ll configure MySQL to depend on a virtual IP (192.168.197.151) so that the IP address follows the MySQL database as it moves.

From the GUI toolbar, right-click the mysql resource. Choose Create Dependency from the context menu. In the Child Resource Tag drop-down menu, choose ip-192.168.197.151. Click Next, click Create Dependency, and then click Done. Your resource hierarchy should now look like this:
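
A resource hierarchy is a dependency graph, and it can help to picture the order in which its parts come up. The sketch below models the hierarchy from this walkthrough with Python's `graphlib` (Python 3.9 or later), assuming that a resource is brought in service only after everything it depends on. The tags `filesystem` and `datarep` are placeholders; your GUI will show the default tags that the wizards generated.

```python
from graphlib import TopologicalSorter

# Each resource maps to the set of resources it depends on.
# "filesystem" and "datarep" are placeholder tags for this sketch.
hierarchy = {
    "mysql": {"ip-192.168.197.151", "filesystem"},
    "filesystem": {"datarep"},
}


def start_order(dependencies):
    """Return one valid order in which dependencies come before dependents."""
    return list(TopologicalSorter(dependencies).static_order())


print(start_order(hierarchy))
```

In any order the function returns, `mysql` comes last, which matches the idea that the database starts only once its virtual IP and replicated storage are available.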

Step 23: Review MySQL IP resource hierarchy

At this point in the evaluation, you’ve fully protected MySQL and its dependent resources (IP addresses and replicated storage). Test your environment, and you’re ready to go.

You can find much more information and detailed steps for every stage of the evaluation process in the SIOS SteelEye Protection Suite for Linux MySQL with Data Replication Evaluation Guide. To download an evaluation copy of SPS for Linux, visit the SIOS website or contact SIOS at info@us.sios.com.


Reproduced with permission from Linuxclustering